Serving Large Models: Part 2 - Exploring Ollama and TGI

In the first part of our "Serving Large Models" series, we explored vLLM, the llama.cpp server, and SGLang, each offering an efficient way to deploy large language models (LLMs). A feature they share is an OpenAI-compatible API, which makes integration with existing systems straightforward: developers can plug in these open-source alternatives while staying compatible with well-established frameworks like LangChain and LlamaIndex.

In this second part, we continue our exploration with two more tools gaining traction in LLM deployment: Ollama and Text Generation Inference (TGI). Both of these solutions also offer OpenAI-compatible APIs, making it easy to leverage them in applications that require flexible, high-performance AI models without cloud dependency.

Ollama: Simplifying Local LLM Deployment

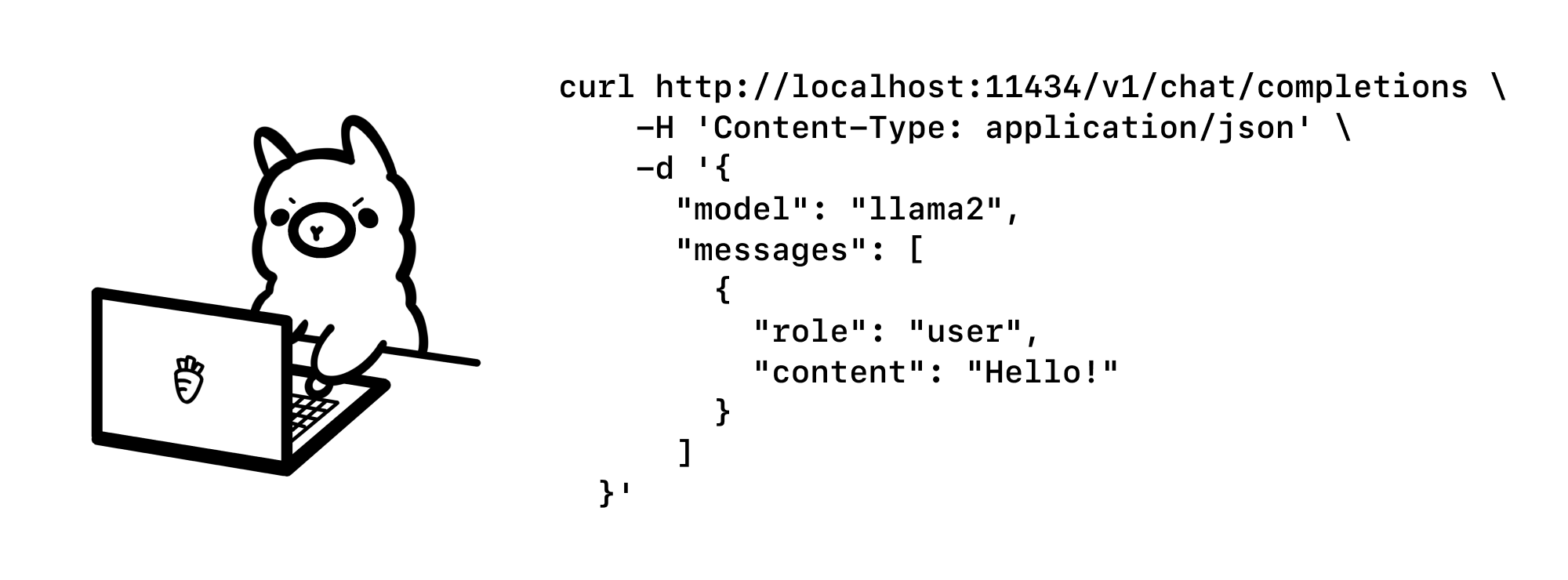

Ollama is an open-source platform designed to simplify the deployment of large language models on your local machine. It acts as a bridge between the complex infrastructure typically required for LLMs and users seeking a more accessible and customizable AI experience. With its OpenAI-compatible API, Ollama integrates seamlessly with existing tools and workflows.

The platform emphasizes support for quantized models, which are crucial for reducing memory usage and improving performance. Ollama offers a growing library of pre-trained models, from versatile general-purpose options to specialized models for niche tasks. Notable models available include Llama 3.1, Qwen, Mistral, and specialized variants like deepseek-coder-v2.

Ollama's user-friendly setup process and intuitive model management are facilitated by its unified Modelfile format. Its cross-platform support, including Windows, macOS, and Linux, further enhances accessibility. By providing local model serving with an OpenAI-compatible interface, Ollama is a robust choice for developers seeking the flexibility of local deployment along with the ease of standard API integrations.
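To make the Modelfile idea concrete, here is a minimal sketch that generates one from Python. The directives it emits (`FROM`, `PARAMETER`, `SYSTEM`) are standard Modelfile instructions, but the helper `render_modelfile`, the model name `my-assistant` in the usage note, and the parameter values are hypothetical illustrations, not something prescribed by Ollama.

```python
from __future__ import annotations


def render_modelfile(base_model: str, system_prompt: str | None = None,
                     parameters: dict[str, object] | None = None) -> str:
    """Build the text of an Ollama Modelfile (hypothetical helper)."""
    lines = [f"FROM {base_model}"]
    # One PARAMETER line per runtime/sampling option, e.g. temperature or num_ctx.
    for name, value in (parameters or {}).items():
        lines.append(f"PARAMETER {name} {value}")
    if system_prompt:
        # Triple quotes allow the system prompt to span multiple lines.
        lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_modelfile(
        "qwen2:1.5b",
        system_prompt="You are a concise technical assistant.",
        parameters={"temperature": 0.2, "num_ctx": 4096},
    ))
```

Saving the output as `Modelfile` and running `ollama create my-assistant -f Modelfile` registers the customized model, which can then be started with `ollama run my-assistant`.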

Code Example

Installing Dependencies and Ollama

To start, we need to install the openai Python package. This library provides a simple interface to interact with any OpenAI-compatible API, which will be key when we connect to the Ollama server later.

pip install openai

Next, we install Ollama on Linux with a single command. Because Ollama is designed to simplify running LLMs locally, setup is quick and straightforward.

curl -fsSL https://ollama.com/install.sh | sh

Pulling and Serving a Model with Ollama

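Once Ollama is installed, we pull the qwen2:1.5b model, a small model from Ollama's library that is quick to download and run locally. Note that `ollama pull` talks to a running Ollama server: the Linux install script normally registers the server as a background service, but if it is not running (for example, inside a container without systemd), start `ollama serve` in a separate terminal first.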

ollama pull qwen2:1.5b
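To confirm from Python that the server is reachable and the model was pulled, you can query Ollama's native REST endpoint `GET /api/tags`, which returns the local models as JSON with a `models` list whose entries carry a `name`. The sketch below uses only the standard library; the helper names are hypothetical and the default URL assumes Ollama's standard port 11434.

```python
from __future__ import annotations

import json
import urllib.request


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in payload.get("models", [])]


def list_local_models(base_url: str = "http://127.0.0.1:11434",
                      timeout: float = 2.0) -> list[str] | None:
    """Return local model names, or None if the Ollama server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return parse_model_names(json.load(resp))
    except OSError:
        # urllib.error.URLError and socket timeouts both subclass OSError:
        # the server is most likely not running.
        return None


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("Ollama server not reachable; start it with `ollama serve`.")
    else:
        print("qwen2:1.5b available:", "qwen2:1.5b" in models)
```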

To make the model available over an API, the Ollama server must be running. If the installer has not already started it as a background service, launch it yourself; by default it listens on port 11434.

ollama serve

Making a Simple Request to Ollama Using the OpenAI Client

Here, we set up the OpenAI client to communicate with the Ollama server. The base_url points to the server's OpenAI-compatible /v1 endpoint, while the api_key is required by the client but ignored by Ollama. We then send a chat completion request to the qwen2:1.5b model, which we pulled above.

from openai import OpenAI

# Point the client at Ollama's OpenAI-compatible endpoint
client = OpenAI(
    base_url="http://127.0.0.1:11434/v1",
    api_key="ollama",  # required by the client, ignored by Ollama
)

response = client.chat.completions.create(
    model="qwen2:1.5b",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What is deep learning?"},
    ],
)

print(response.choices[0].message.content)
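Under the hood, the OpenAI client POSTs a JSON body to `{base_url}/chat/completions`. The sketch below makes the same request with only the standard library, which can help when debugging the server or when the `openai` package is unavailable. It assumes the OpenAI-style request fields (`model`, `messages`, `stream`) and response shape (`choices[0].message.content`); `build_chat_request` and `chat` are hypothetical helper names.

```python
from __future__ import annotations

import json
import urllib.request


def build_chat_request(base_url: str, model: str, messages: list[dict],
                       api_key: str = "ollama") -> urllib.request.Request:
    """Prepare a POST to an OpenAI-compatible /chat/completions endpoint."""
    body = json.dumps({"model": model, "messages": messages, "stream": False})
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=body.encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Ollama ignores the key, but OpenAI-style clients always send one.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(base_url: str, model: str, messages: list[dict]) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(base_url, model, messages)) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

With the server running, `chat("http://127.0.0.1:11434/v1", "qwen2:1.5b", [{"role": "user", "content": "What is deep learning?"}])` should return the same kind of answer as the client example.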

Streaming Responses with the OpenAI API

The client is configured the same way, but this time we are streaming responses as they’re generated. This is particularly useful for real-time applications where you need to process tokens as they come in.

from openai import OpenAI

# init the client but point it to Ollama
client = OpenAI(
    base_url="http://127.0.0.1:11434/v1",
    api_key="ollama",
)

chat_completion = client.chat.completions.create(
    model="qwen2:1.5b",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What is deep learning?"},
    ],
    stream=True,
)

# iterate over the stream and print each text fragment as it arrives
for chunk in chat_completion:
    # delta.content can be None (e.g., on the final chunk), so fall back to ""
    print(chunk.choices[0].delta.content or "", end="", flush=True)
print()
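With `stream=True`, an OpenAI-compatible server sends Server-Sent Events: each event is a `data: {json}` line holding a chunk whose `choices[0].delta` may carry a fragment of text, and the stream typically ends with `data: [DONE]`. The sketch below reassembles the full reply from such lines; it works on plain strings so it can be tried without a server, and `collect_stream_text` is a hypothetical name.

```python
from __future__ import annotations

import json
from typing import Iterable


def collect_stream_text(lines: Iterable[str]) -> str:
    """Concatenate choices[0].delta.content from OpenAI-style SSE lines."""
    parts: list[str] = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and SSE comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream sentinel
        choices = json.loads(data).get("choices") or []
        if choices:
            # Role-only or final deltas may have no "content" (or None).
            content = (choices[0].get("delta") or {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)
```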

Text Generation Inference (TGI): High-Performance LLM Serving

Text Generation Inference (TGI) is a production-ready toolkit for deploying and serving LLMs with a focus on high-performance text generation. Like other tools discussed in this series, TGI offers an OpenAI-compatible API, making it easy to integrate advanced LLMs into existing applications.

TGI supports popular open-source models such as Gemma 2, Mistral NeMo, Mistral Large, Qwen, and more, making it an attractive choice for organizations that need scalable, high-throughput serving. Key features include tensor parallelism for faster inference across multiple GPUs, token streaming with Server-Sent Events (SSE), and continuous batching to maximize throughput.
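To see why continuous batching raises throughput, consider a toy model in which each request needs some number of decode steps and the GPU holds `batch_size` sequences at once. With static batching, a batch occupies the GPU until its longest request finishes; with continuous batching, a finished sequence's slot is refilled from the queue at the next step. The simulation below counts decode steps under both policies. It is an illustrative simplification (it ignores prefill cost, memory limits, and per-step latency), not TGI's actual scheduler, and it assumes every request needs at least one token.

```python
from __future__ import annotations

from collections import deque


def static_batching_steps(lengths: list[int], batch_size: int) -> int:
    """Each batch runs until its longest request is done."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps


def continuous_batching_steps(lengths: list[int], batch_size: int) -> int:
    """Free slots are refilled from the queue after every decode step."""
    queue = deque(lengths)
    active: list[int] = []  # remaining tokens for each running request
    steps = 0
    while queue or active:
        # Admit waiting requests into any free slots.
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        # One decode step produces one token for every active request.
        active = [remaining - 1 for remaining in active if remaining > 1]
        steps += 1
    return steps


if __name__ == "__main__":
    requests = [100, 10, 10, 10, 10, 10, 10, 100]
    print("static:", static_batching_steps(requests, batch_size=2))
    print("continuous:", continuous_batching_steps(requests, batch_size=2))
```

With these sample lengths, static batching needs 220 decode steps and continuous batching 160, because short requests no longer wait behind the long ones sharing their batch.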

In addition to these capabilities, TGI implements optimizations such as Flash Attention and Paged Attention, which speed up inference for transformer architectures. It also supports quantization through techniques like bitsandbytes and GPTQ, which reduce memory requirements with limited loss in output quality. For customized tasks, TGI can serve fine-tuned models and offers guidance features that constrain outputs to a required format (for example, a JSON schema), improving the relevance and reliability of results.
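As an illustration of guidance, TGI's guidance documentation describes a `grammar` entry inside the `parameters` of a `/generate` request: `{"type": "json", "value": <JSON schema>}` constrains the output to that schema (a `"regex"` type is also described). The sketch below only builds such a request body; the helper name, prompt, and schema are hypothetical, and the exact fields should be checked against the docs for your TGI version.

```python
from __future__ import annotations

import json


def build_guided_request(prompt: str, schema: dict, max_new_tokens: int = 200) -> str:
    """JSON body for a TGI /generate call with JSON-schema guidance (hypothetical helper)."""
    payload = {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            # Constrain decoding so the output must match this JSON schema.
            "grammar": {"type": "json", "value": schema},
        },
    }
    return json.dumps(payload)


# Hypothetical schema: extract the animals mentioned in the prompt.
animal_schema = {
    "type": "object",
    "properties": {"animals": {"type": "array", "items": {"type": "string"}}},
    "required": ["animals"],
}

if __name__ == "__main__":
    print(build_guided_request("I saw a puppy and a cat in the park.", animal_schema))
```

The printed body can be POSTed to `/generate` with the same `curl` pattern used for `/generate_stream` in the Docker section.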

With features such as distributed tracing and Prometheus metrics for monitoring, TGI is well suited to serving LLMs in demanding production environments.
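TGI exposes its Prometheus metrics over HTTP at `/metrics` in the Prometheus text exposition format. The sketch below parses that format into a dictionary so you can spot-check values without a full Prometheus setup. It deliberately handles only the simple case (one sample per line, no trailing timestamps, no spaces inside label values); the helper names are hypothetical, the default URL assumes the Docker port mapping used later in this article, and the metric names in the test are illustrative.

```python
from __future__ import annotations

import urllib.request


def parse_prometheus_text(text: str) -> dict[str, float]:
    """Map 'name{labels}' -> value for simple Prometheus text-format samples."""
    samples: dict[str, float] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE comments
        # Assumes no trailing timestamp and no spaces inside label values,
        # so the sample value is the last whitespace-separated field.
        key, value = line.rsplit(maxsplit=1)
        samples[key] = float(value)
    return samples


def fetch_metrics(base_url: str = "http://127.0.0.1:8080",
                  timeout: float = 5.0) -> dict[str, float]:
    """Scrape and parse the /metrics endpoint of a running TGI server."""
    with urllib.request.urlopen(f"{base_url}/metrics", timeout=timeout) as resp:
        return parse_prometheus_text(resp.read().decode("utf-8"))
```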

Code Example

Installing TGI Locally

First, we set up the environment by installing the necessary dependencies, libssl-dev and gcc. We also download and install the Protocol Buffers compiler (protoc), which is needed to compile the protobuf files used by TGI. Building TGI from source additionally requires a Rust toolchain and a Python environment (Python 3.9 or later, per the TGI README).

sudo apt-get install libssl-dev gcc -y
PROTOC_ZIP=protoc-21.12-linux-x86_64.zip
curl -OL https://github.com/protocolbuffers/protobuf/releases/download/v21.12/$PROTOC_ZIP
sudo unzip -o $PROTOC_ZIP -d /usr/local bin/protoc
sudo unzip -o $PROTOC_ZIP -d /usr/local 'include/*'
rm -f $PROTOC_ZIP
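Before building, a quick preflight check can confirm that the required executables are on your `PATH`. This sketch uses `shutil.which`; the default tool list is an assumption based on the steps shown here (`gcc`, `protoc`) plus `cargo`, since TGI's from-source build also needs a Rust toolchain. `missing_tools` is a hypothetical helper, and libraries such as libssl-dev cannot be detected this way.

```python
from __future__ import annotations

import shutil


def missing_tools(tools: tuple[str, ...] = ("gcc", "protoc", "cargo")) -> list[str]:
    """Return the names of required executables that are not found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    print("All build tools found." if not missing else f"Missing: {', '.join(missing)}")
```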

Building and Running TGI Locally

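To install and run TGI from source, clone the huggingface/text-generation-inference repository and, from its root directory, build it with extensions (custom CUDA kernels) enabled for better GPU performance. After that, you can launch the text-generation service with any model supported by the Hugging Face ecosystem, such as Mistral Large. Keep in mind that Mistral-Large-Instruct-2407 has about 123B parameters, so in practice it must be sharded across several GPUs.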

BUILD_EXTENSIONS=True make install

text-generation-launcher --model-id mistralai/Mistral-Large-Instruct-2407

To run a different model, simply change the --model-id argument:

text-generation-launcher --model-id Qwen/Qwen2-1.5B-Instruct

Running with Quantization

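You can reduce VRAM usage by running pre-quantized weights (for example AWQ or GPTQ checkpoints) or by quantizing on the fly with options such as bitsandbytes, EETQ, or fp8. This is particularly useful when running large models on limited hardware. The example below serves a GPTQ Int8 checkpoint sharded across two GPUs.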

text-generation-launcher --model-id Qwen/Qwen2-72B-Instruct-GPTQ-Int8 --sharded true --num-shard 2 --quantize gptq
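Quantization and sharding go together here because of simple arithmetic: weight memory is roughly parameter count times bytes per parameter, divided by the number of shards. The back-of-the-envelope helper below applies that rule; it counts weights only and ignores the KV cache, activations, and quantization overhead such as scales, so real usage is higher. The 72B figure comes from the model name in the command above, and `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int, num_shards: int = 1) -> float:
    """Approximate weight memory per GPU in GB (1 GB = 1e9 bytes), weights only."""
    total_bytes = params_billion * 1e9 * bits_per_param / 8
    return total_bytes / num_shards / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        per_gpu = weight_memory_gb(72, bits, num_shards=2)
        print(f"72B @ {bits}-bit across 2 GPUs: ~{per_gpu:.0f} GB of weights per GPU")
```

For the Int8 GPTQ model sharded two ways this gives about 36 GB of weights per GPU, versus about 72 GB per GPU at 16-bit precision, before the KV cache and runtime overhead.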

Using Docker for TGI Deployment

If you prefer using Docker, you can deploy TGI easily by mounting a volume for model data and exposing the server’s port:

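model=Qwen/Qwen2-1.5B-Instruct
volume=$PWD/data

Then, run the Docker container with GPU access. The -p 8080:80 flag publishes the container's port 80 on host port 8080, and the mounted volume keeps downloaded weights between runs:

docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data \
    ghcr.io/huggingface/text-generation-inference:2.2.0 --model-id $model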

You can test the deployment by sending a request to generate text:

curl 127.0.0.1:8080/generate_stream \
    -X POST \
    -d '{"inputs":"What is Deep Learning?","parameters":{"max_new_tokens":20}}' \
    -H 'Content-Type: application/json'
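The same streaming request can be made from Python. TGI's `/generate_stream` emits Server-Sent Events whose `data:` payloads are JSON objects with a `token` field holding the generated `text` and a `special` flag; the sketch below sends the request with the standard library and yields the non-special token texts. The base URL assumes the Docker port mapping above (8080), and `iter_token_texts` and `stream_generate` are hypothetical helper names.

```python
from __future__ import annotations

import json
import urllib.request
from typing import Iterable, Iterator


def iter_token_texts(lines: Iterable[str]) -> Iterator[str]:
    """Yield token text from TGI /generate_stream SSE lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # ignore blank separators between events
        event = json.loads(line[len("data:"):])
        token = event.get("token") or {}
        if not token.get("special", False):  # skip e.g. end-of-sequence tokens
            yield token.get("text", "")


def stream_generate(prompt: str, base_url: str = "http://127.0.0.1:8080",
                    max_new_tokens: int = 20) -> Iterator[str]:
    """POST to /generate_stream and yield tokens as they arrive."""
    body = json.dumps({"inputs": prompt,
                       "parameters": {"max_new_tokens": max_new_tokens}}).encode("utf-8")
    req = urllib.request.Request(f"{base_url}/generate_stream", data=body,
                                 headers={"Content-Type": "application/json"},
                                 method="POST")
    with urllib.request.urlopen(req) as resp:
        # Iterating the response yields raw byte lines as they are received.
        yield from iter_token_texts(line.decode("utf-8") for line in resp)
```

With the container running, `print("".join(stream_generate("What is Deep Learning?")))` prints the generated text.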

OpenAI-Compatible API Support

One of the significant features of TGI (from version 1.4.0 onwards) is its compatibility with the OpenAI Chat Completion API. This allows you to use OpenAI’s client libraries or other libraries expecting the same schema to interact with TGI’s Messages API.

Making a Synchronous Request

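Here, we point the OpenAI client at the TGI endpoint and create a chat completion request just as we would against the OpenAI API. Port 3000 is the default for text-generation-launcher; if you started TGI with the Docker command above, use http://localhost:8080/v1 instead. The model value "tgi" is a placeholder, since the server hosts a single model, and the api_key is a dummy value.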

from openai import OpenAI

# init the client but point it to TGI
client = OpenAI(
    base_url="http://localhost:3000/v1",
    api_key="-",
)

chat_completion = client.chat.completions.create(
    model="tgi",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What is deep learning?"},
    ],
    stream=False,
)

print(chat_completion.choices[0].message.content)

Making a Streaming Request

For real-time applications, you can stream responses as they are generated. With stream=True, the OpenAI client returns an iterator over the streamed chunks, so you can process and display results as they arrive.

from openai import OpenAI

# init the client but point it to TGI
client = OpenAI(
    base_url="http://localhost:3000/v1",
    api_key="-",
)

chat_completion = client.chat.completions.create(
    model="tgi",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What is deep learning?"},
    ],
    stream=True,
)

# iterate over the stream and print each text fragment as it arrives
for chunk in chat_completion:
    # delta.content can be None (e.g., on the final chunk), so fall back to ""
    print(chunk.choices[0].delta.content or "", end="", flush=True)
print()

This flexibility makes TGI a powerful tool for integrating large language models into diverse applications while maintaining compatibility with familiar OpenAI APIs.

In this part, we looked at how Ollama and Text Generation Inference (TGI) make it easier to deploy and serve large language models. Ollama focuses on simplifying local setups, while TGI is built for high-performance and production use. Both tools offer OpenAI-compatible APIs, making integration straightforward and flexible. Combined with the tools from the first part of this series, you now have a solid toolkit for running large models, whether it’s for local testing or full-scale deployment.