Embark on Your Agent-Building Journey: A Simple Search Agent with Ollama (Part 1)

This blog post kicks off a series dedicated to building AI agents from the ground up. In this first installment, we'll explore a basic implementation of a search agent powered by Ollama, a fantastic tool for running large language models (LLMs) locally. Our goal is to demonstrate the fundamental concepts of agent design by creating a system that can understand user queries and leverage web search to provide informative responses.

While this implementation serves as an excellent starting point for understanding agent development, it's important to note that it's a simplified version. In real-world scenarios, you'd likely encounter more complex agent behaviors that might require robust parsing techniques to handle unexpected outputs and comprehensive error handling strategies to ensure smooth operation.

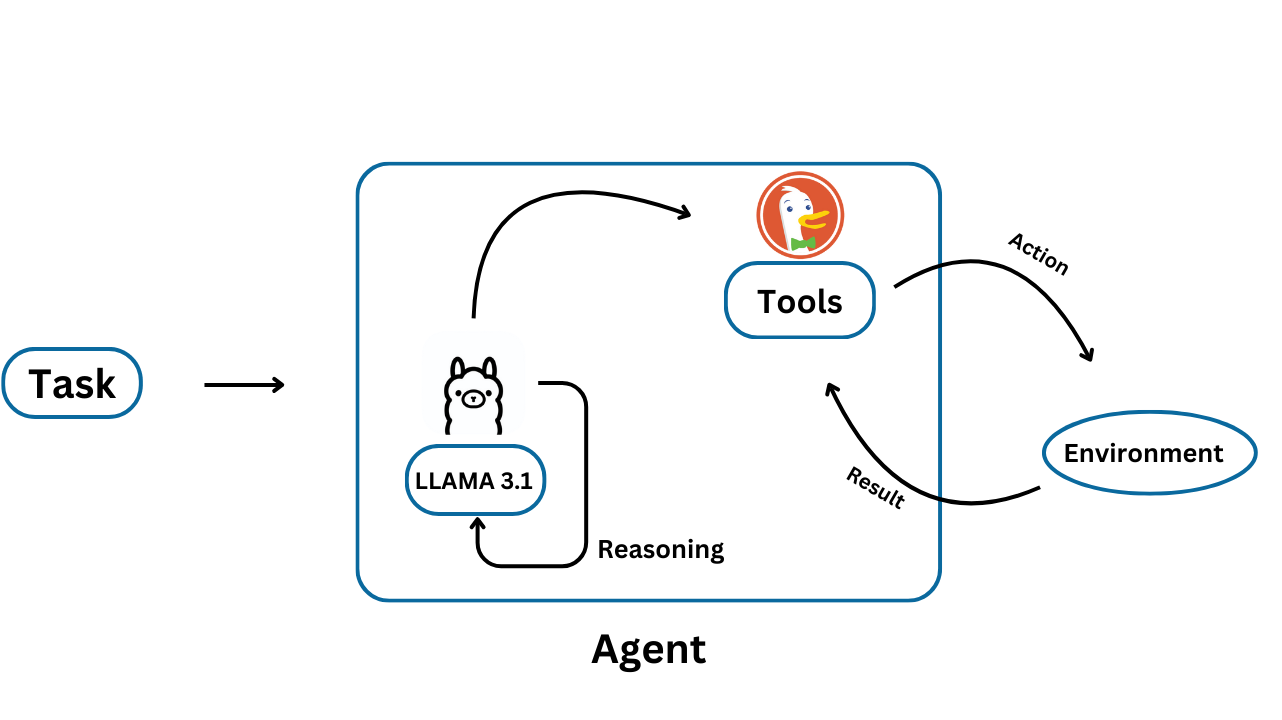

How It Works

- Agent: This represents the overall system that encompasses the LLM and its interactions with the environment.
- Task: This is the objective or goal that the agent is trying to achieve. It's the starting point of the process.
- LLM: This is the core of the agent, a large language model that processes information and makes decisions.
- Reasoning: The LLM engages in reasoning to determine the best course of action based on the task and available information.
- Tools: The agent can utilize various tools (like web search, calculators, or databases) to gather additional information or perform actions.
- Action: Based on its reasoning, the LLM decides on an action to take within its environment.
- Environment: This represents the external world or context in which the agent operates. It could be a website, a physical space, or a simulated environment.
- Result: The action taken by the agent produces a result within the environment, which then feeds back into the agent's understanding and future actions.

An LLM agent like the one in the diagram receives a task and uses its reasoning capabilities to choose the best course of action. It can call tools to gather additional information or perform specific actions, and then acts within its environment. The result of each action is fed back to the LLM as context for its next decision, forming a feedback loop. Note that the model's weights do not change: the agent "learns" within a run only through the information added to its context.
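To make the loop concrete before we touch Ollama, here is a minimal, self-contained sketch of the reason, act, observe cycle. The LLM is replaced by a hypothetical `decide` function that returns an `(action, action_input)` pair, and tools are plain Python functions in a dictionary; the names `agent_loop`, `scripted_decide` and `max_steps` are illustrative assumptions, not part of the agent we build below.

```python
from typing import Callable, Dict, List, Tuple

# A decision is (action_name, action_input); a simplified stand-in for LLM output.
Decision = Tuple[str, str]


def agent_loop(
    task: str,
    decide: Callable[[List[str]], Decision],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Run a minimal reason -> act -> observe loop until an answer is produced."""
    history: List[str] = [f"Task: {task}"]
    for _ in range(max_steps):
        action, action_input = decide(history)  # "Reasoning" step
        if action == "Response To Human":
            return action_input  # final result for the user
        tool = tools.get(action)
        if tool is None:
            return f"Unknown action: {action}"
        observation = tool(action_input)  # "Action" in the environment
        history.append(f"Observation: {observation}")  # result feeds back
    return "Stopped after reaching max_steps."


def scripted_decide(history: List[str]) -> Decision:
    # Hypothetical stand-in for the LLM: search once, then answer.
    if len(history) == 1:
        return ("Search", "ollama")
    return ("Response To Human", history[-1])


tools = {"Search": lambda q: f"results for {q}"}
print(agent_loop("Tell me about ollama", scripted_decide, tools))
# → Observation: results for ollama
```

The `max_steps` cap plays the same role as the search limit in the real agent: without it, a model that never decides to answer would loop forever.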

Code Example

Setting up Ollama and Dependencies

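To begin, we need to install Ollama, which lets us run LLMs such as Llama 3.1 (the model used throughout this post) locally.

```shell
# Install Ollama on Linux
curl -fsSL https://ollama.com/install.sh | sh

# Start the Ollama server if it is not already running as a service
# (this command stays in the foreground, so use a separate terminal)
ollama serve

# Download the model used in this post
ollama pull llama3.1
```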

We'll also install the Python libraries we need: openai, for talking to the LLM through Ollama's OpenAI-compatible API, and duckduckgo-search, for performing web searches.

```shell
pip install openai duckduckgo-search
```

Creating an OpenAI Client for Local LLM Interaction

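We'll connect to our locally running LLM using the openai library, pointing it at Ollama's OpenAI-compatible endpoint.

```python
from openai import OpenAI

# Ollama serves an OpenAI-compatible API under /v1. Ollama ignores the API key,
# but the client still requires a non-empty value.
client = OpenAI(
    api_key="Empty",
    base_url="http://127.0.0.1:11434/v1",
)
```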

This client will enable us to send prompts and receive responses from the LLM, effectively treating it as our language processing engine.
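As a quick sanity check of the client, a single round trip can be wrapped in a small helper. This is a sketch: the name `ask` is hypothetical, and it relies only on the `client.chat.completions.create(...)` call and the `choices[0].message.content` response shape that the agent loop later in this post also uses. The client is passed in as a parameter so the helper can be exercised with a fake object.

```python
from typing import Any


def ask(client: Any, prompt: str, model: str = "llama3.1") -> str:
    """Send one user message and return the text of the model's reply."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    # The reply text lives on the first choice's message.
    return response.choices[0].message.content


# Usage (requires the Ollama server and the llama3.1 model):
# print(ask(client, "Say hello in one word."))
```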

Implementing the Search Functionality

Our agent needs the ability to search the web for information. We define a search function that utilizes the duckduckgo-search library to query DuckDuckGo and retrieve relevant search results.

```python
from duckduckgo_search import DDGS

def search(query, max_results=10):
    # Each result is a dict describing one hit; return them one per line
    results = DDGS().text(query, max_results=max_results)
    return "\n".join(str(result) for result in results)
```

These results will be used to augment the LLM's knowledge when necessary.
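Passing raw `str(dict)` output to the model works, but it wastes context on braces and quotes. One possible refinement, which is our own assumption rather than part of the original agent, is to render each hit as numbered text and cap the total length. The sketch below assumes each result dict has `title`, `href` and `body` keys (what `DDGS().text()` returned in the versions we tried; verify against your installed version); missing keys are rendered as empty strings, and the name `format_results` is hypothetical.

```python
from typing import Dict, List


def format_results(results: List[Dict[str, str]], max_chars: int = 2000) -> str:
    """Render search hits as numbered, compact text and cap the total length."""
    lines = []
    for i, hit in enumerate(results, start=1):
        lines.append(
            f"[{i}] {hit.get('title', '')}\n"
            f"URL: {hit.get('href', '')}\n"
            f"{hit.get('body', '')}"
        )
    text = "\n\n".join(lines)
    # Truncate so a long observation does not crowd out the rest of the context.
    return text[:max_chars]


hits = [{"title": "Ollama", "href": "https://ollama.com", "body": "Run LLMs locally."}]
print(format_results(hits))
# → [1] Ollama
#   URL: https://ollama.com
#   Run LLMs locally.
```

To use it, `search` would return `format_results(results)` instead of joining the raw dicts.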

Defining Action and Input Extraction

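To guide the agent's behavior, we need to extract its intended action from the LLM's output.

```python
import re

def extract_action_and_input(text):
    # "Action: <name>" must be followed by a newline
    action_pattern = r"Action: (.+?)\n"
    # The input is expected in double quotes on the "Action Input:" line
    input_pattern = r"Action Input: \"(.+?)\""
    # findall returns every match; the run loop uses the last one
    action = re.findall(action_pattern, text)
    action_input = re.findall(input_pattern, text)
    return action, action_input
```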

We define a function to extract the "Action" and "Action Input" from the LLM's response, allowing us to determine whether it intends to respond directly to the user or perform a web search.
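The regexes above are brittle: the non-greedy `"(.+?)"` stops at the first inner quote, and because `.` does not match newlines, a multi-line answer is not captured at all. A somewhat more tolerant parser, sketched below under the assumption that "Action Input:" is the last field in the response, takes everything after "Action Input:" and strips one pair of surrounding quotes. The name `parse_step` is hypothetical, and it returns a single `(action, input)` tuple (or `None`) rather than the two lists the original function returns.

```python
import re
from typing import Optional, Tuple


def parse_step(text: str) -> Optional[Tuple[str, str]]:
    """Return (action, action_input) from a Thought/Action/Action Input reply."""
    # The "Action:" line; MULTILINE makes ^ and $ work per line.
    action_match = re.search(r"^\s*Action:\s*(.+?)\s*$", text, re.MULTILINE)
    # Everything after "Action Input:", including newlines (DOTALL).
    input_match = re.search(r"Action Input:\s*(.*)", text, re.DOTALL)
    if not action_match or not input_match:
        return None
    action = action_match.group(1)
    action_input = input_match.group(1).strip()
    # Remove one pair of surrounding double quotes, keeping any inner quotes.
    if len(action_input) >= 2 and action_input[0] == '"' and action_input[-1] == '"':
        action_input = action_input[1:-1]
    return action, action_input


reply = 'Thought: x\nAction: Response To Human\nAction Input: "Ollama runs "local" models.\nIt has a CLI."\n'
print(parse_step(reply))
```

For this reply, the original `input_pattern` would have captured only `Ollama runs `.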

Crafting the System Prompt

The system prompt provides instructions to the LLM on how to behave. It outlines the available tools (in this case, web search) and the decision-making process for choosing between direct responses and utilizing the search tool.

```python
System_prompt = """
Answer the following questions as best you can. Use web search only if necessary.

You have access to the following tool:

Search: useful for when you need to answer questions about current events or when you need specific information that you don't know. However, you should only use it if the user's query cannot be answered with general knowledge or a straightforward response.

You will receive a message from the human, then you should start a loop and do one of the following:

Option 1: Respond to the human directly
- If the user's question is simple, conversational, or can be answered with your existing knowledge, respond directly.
- If the user did not ask for a search or the question does not need updated or specific information, avoid using the search tool.

Use the following format when responding to the human:
Thought: Explain why a direct response is sufficient or why no search is needed.
Action: Response To Human
Action Input: "your response to the human, summarizing what you know or concluding the conversation"

Option 2: Use the search tool to answer the question.
- If you need more information to answer the question, or if the question is about a current event or specific knowledge that may not be in your training data, use the search tool.
- After receiving search results, decide whether you have enough information to answer the question or if another search is necessary.

Use the following format when using the search tool:
Thought: Explain why a search is needed or why additional searches are needed.
Action: Search
Action Input: "the input to the action, to be sent to the tool"

Remember to always decide carefully whether to search or respond directly. If you can answer the question without searching, always prefer responding directly.
"""
```

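This prompt steers the model toward a consistent Thought / Action / Action Input format. It cannot guarantee compliance, which is why the parser and the run loop below still check for malformed replies.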
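When more tools are added, keeping their descriptions in a hard-coded prompt becomes error-prone. A sketch of one alternative, assuming a simple registry that maps each action name to a one-line description, is to render the "You have access to..." section from that registry. The function name `render_tool_section` is hypothetical; the action names must still match the strings the run loop compares against (such as `"Search"`).

```python
from typing import Dict


def render_tool_section(tools: Dict[str, str]) -> str:
    """Build the tool-description part of the system prompt from a registry."""
    noun = "tool" if len(tools) == 1 else "tools"
    lines = [f"You have access to the following {noun}:"]
    for name, description in tools.items():
        lines.append(f"{name}: {description}")
    return "\n".join(lines)


print(render_tool_section({"Search": "useful for questions about current events."}))
# → You have access to the following tool:
#   Search: useful for questions about current events.
```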

Building the Agent's Run Loop

The core of our agent lies in the run_agent function. This function manages the interaction loop between the user, the LLM, and the search tool.

```python
def run_agent(prompt, system_prompt):
    # Prepare the initial conversation
    messages = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": prompt},
    ]
    previous_searches = set()  # Track previous search queries to avoid repetition

    while True:
        response = client.chat.completions.create(
            model="llama3.1",
            messages=messages,
        )
        response_text = response.choices[0].message.content
        print(response_text)

        action, action_input = extract_action_and_input(response_text)
        if not action or not action_input:
            return "Failed to parse action or action input."

        if action[-1] == "Search":
            search_query = action_input[-1].strip()

            # Stop if the model repeats a search it has already performed
            if search_query in previous_searches:
                print("Repeated search detected. Stopping to prevent infinite loop.")
                return "Stopped: repeated search query."

            print(f"================ Performing search for: {search_query} ================")
            observation = search(search_query)
            print("================ Search completed !! ================")
            previous_searches.add(search_query)  # Record the performed search

            # The model's own step goes back as "assistant"; the results as "user"
            messages.extend([
                {"role": "assistant", "content": response_text},
                {"role": "user", "content": f"Observation: {observation}"},
            ])

        elif action[-1] == "Response To Human":
            print(f"Response: {action_input[-1]}")
            return action_input[-1]

        else:
            # Unknown action: stop instead of re-sending the same messages forever
            return f"Unknown action: {action[-1]}"

        # Cap the number of searches to prevent infinite looping
        if len(previous_searches) > 3:
            print("Too many searches performed. Ending to prevent infinite loop.")
            return "Stopped: too many searches."
```

It processes user prompts, sends them to the LLM, interprets the LLM's response, performs searches when needed, and ultimately provides a final answer to the user.
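Because `run_agent` calls the global `client` and the real `search`, it can only be exercised with Ollama running and network access. One way to test the control flow offline, sketched here as an assumption rather than the original design, is to pass the LLM call and the search tool in as functions. `run_agent_with`, `complete`, `search_fn` and `max_searches` are hypothetical names; the parsing regexes are the same as in `extract_action_and_input`.

```python
import re
from typing import Callable, Dict, List

Message = Dict[str, str]


def run_agent_with(
    prompt: str,
    system_prompt: str,
    complete: Callable[[List[Message]], str],
    search_fn: Callable[[str], str],
    max_searches: int = 3,
) -> str:
    """run_agent's control flow with the LLM call and search tool injected."""
    messages: List[Message] = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": prompt},
    ]
    seen = set()
    while True:
        text = complete(messages)
        action = re.findall(r"Action: (.+?)\n", text)
        action_input = re.findall(r'Action Input: "(.+?)"', text)
        if not action or not action_input:
            return "Failed to parse action or action input."
        if action[-1] == "Response To Human":
            return action_input[-1]
        if action[-1] != "Search":
            return f"Unknown action: {action[-1]}"
        query = action_input[-1].strip()
        if query in seen or len(seen) >= max_searches:
            return "Stopped: repeated search or search limit reached."
        seen.add(query)
        messages.append({"role": "assistant", "content": text})
        messages.append({"role": "user", "content": f"Observation: {search_fn(query)}"})


# A scripted "model" that searches once and then answers.
replies = iter([
    'Thought: need info\nAction: Search\nAction Input: "ollama"',
    'Thought: enough\nAction: Response To Human\nAction Input: "Ollama runs LLMs locally."',
])
log = []


def fake_complete(msgs: List[Message]) -> str:
    log.append(list(msgs))  # snapshot of the conversation sent to the "model"
    return next(replies)


print(run_agent_with("Tell me about ollama", "SYSTEM", fake_complete, lambda q: f"results for {q}"))
# → Ollama runs LLMs locally.
```

The `log` snapshot makes it easy to check that the second call sees the system, user, assistant and observation messages in that order.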

Illustrative Examples

Finally, we demonstrate the agent's capabilities with two examples.

The first showcases a simple conversational interaction where the agent responds directly to a greeting.

```python
response = run_agent(prompt="Hi", system_prompt=System_prompt)
```

The output:

```
Thought: This is a greeting, which doesn't require any specific knowledge or information.
Action: Response To Human
Action Input: "Hello! How are you today?"
Response: Hello! How are you today?
```

The second example demonstrates a scenario where the agent needs to perform a web search to answer a question about the Ollama framework, highlighting its ability to leverage external knowledge sources.

```python
response = run_agent(prompt="Tell Me About ollama framework", system_prompt=System_prompt)
```

The output:

```
Thought: Since I don't have prior knowledge about an "ollama" framework, I should do a search to find out more about it.
Action: Search
Action Input: "ollama framework"
================ Performing search for: ollama framework ================
================ Search completed !! ================
Thought: The search results provide sufficient information about the Ollama framework.
Action: Response To Human
Action Input: "The Ollama framework is an open-source tool designed for building and running large language models on a local machine. It provides a simple API for creating, running, and managing models, as well as a library of pre-built models that can be used in various applications. You can download Ollama from its official website and use it to run LLMs locally with a command-line interface on MacOS and Linux."
Response: The Ollama framework is an open-source tool designed for building and running large language models on a local machine. It provides a simple API for creating, running, and managing models, as well as a library of pre-built models that can be used in various applications. You can download Ollama from its official website and use it to run LLMs locally with a command-line interface on MacOS and Linux.
```

Building this simple search agent was a great way to learn the basics. But the real fun begins as we explore more advanced techniques and create agents that can handle even trickier tasks.

Happy prompting!